No related branches found

No related tags found

Showing

- book/_toc.yml 17 additions, 0 deletionsbook/_toc.yml

- book/sandbox/continuous/GOF.md 130 additions, 0 deletionsbook/sandbox/continuous/GOF.md

- book/sandbox/continuous/PDF_CDF.md 84 additions, 0 deletionsbook/sandbox/continuous/PDF_CDF.md

- book/sandbox/continuous/Param_distr.md 22 additions, 0 deletionsbook/sandbox/continuous/Param_distr.md

- book/sandbox/continuous/Reminder_intro.md 11 additions, 0 deletionsbook/sandbox/continuous/Reminder_intro.md

- book/sandbox/continuous/empirical.md 88 additions, 0 deletionsbook/sandbox/continuous/empirical.md

- book/sandbox/continuous/figures/GOF_data.png 0 additions, 0 deletionsbook/sandbox/continuous/figures/GOF_data.png

- book/sandbox/continuous/figures/QQplot.png 0 additions, 0 deletionsbook/sandbox/continuous/figures/QQplot.png

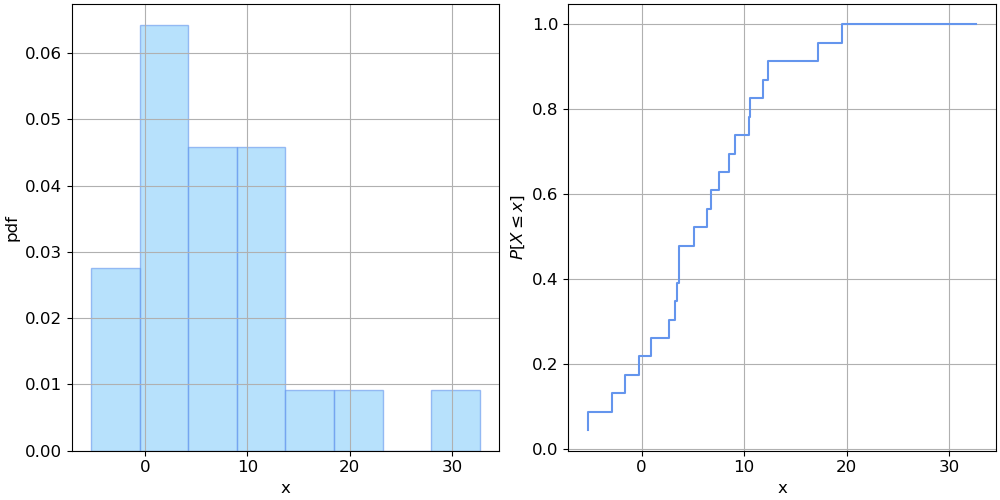

- book/sandbox/continuous/figures/data_overview.png 0 additions, 0 deletionsbook/sandbox/continuous/figures/data_overview.png

- book/sandbox/continuous/figures/ecdf_wind.png 0 additions, 0 deletionsbook/sandbox/continuous/figures/ecdf_wind.png

- book/sandbox/continuous/figures/epdf_wind.png 0 additions, 0 deletionsbook/sandbox/continuous/figures/epdf_wind.png

- book/sandbox/continuous/figures/gaussian.png 0 additions, 0 deletionsbook/sandbox/continuous/figures/gaussian.png

- book/sandbox/continuous/figures/log-scale.png 0 additions, 0 deletionsbook/sandbox/continuous/figures/log-scale.png

- book/sandbox/continuous/figures/sketch_KS.png 0 additions, 0 deletionsbook/sandbox/continuous/figures/sketch_KS.png

- book/sandbox/continuous/figures/survival.png 0 additions, 0 deletionsbook/sandbox/continuous/figures/survival.png

- book/sandbox/continuous/fitting.md 16 additions, 0 deletionsbook/sandbox/continuous/fitting.md

- book/sandbox/continuous/other_distr.md 22 additions, 0 deletionsbook/sandbox/continuous/other_distr.md

- book/sandbox/prob/prob-background.md 8 additions, 0 deletionsbook/sandbox/prob/prob-background.md

- book/sandbox/prob/prob-discrete.md 3 additions, 0 deletionsbook/sandbox/prob/prob-discrete.md

- book/sandbox/prob/prob-intro.md 13 additions, 0 deletionsbook/sandbox/prob/prob-intro.md

book/sandbox/continuous/GOF.md

0 → 100644

book/sandbox/continuous/PDF_CDF.md

0 → 100644

book/sandbox/continuous/Param_distr.md

0 → 100644

book/sandbox/continuous/Reminder_intro.md

0 → 100644

book/sandbox/continuous/empirical.md

0 → 100644

book/sandbox/continuous/figures/GOF_data.png

0 → 100644

22.9 KiB

book/sandbox/continuous/figures/QQplot.png

0 → 100644

41.3 KiB

72.2 KiB

17.2 KiB

18.2 KiB

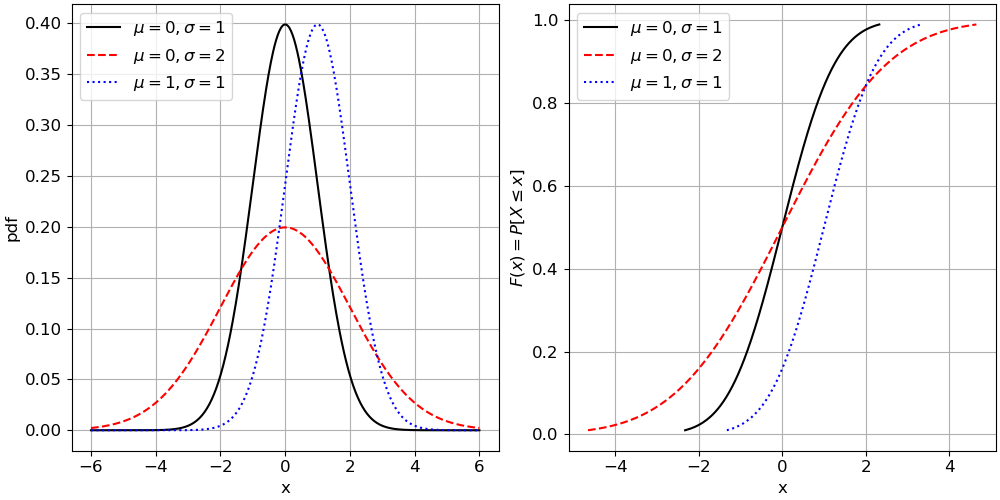

book/sandbox/continuous/figures/gaussian.png

0 → 100644

66.6 KiB

59.6 KiB

23.4 KiB

book/sandbox/continuous/figures/survival.png

0 → 100644

35.3 KiB

book/sandbox/continuous/fitting.md

0 → 100644

book/sandbox/continuous/other_distr.md

0 → 100644

book/sandbox/prob/prob-background.md

0 → 100644

book/sandbox/prob/prob-discrete.md

0 → 100644

book/sandbox/prob/prob-intro.md

0 → 100644