Merge branch 'San_Part' into 'main'

add Chapters of Sandra in sandbox

See merge request !17

Changed files:

- book/_toc.yml 19 additions, 0 deletions

- book/sandbox/ObservationTheory/01_Introduction.md 1 addition, 1 deletion

- book/sandbox/SanPart/.DS_Store 0 additions, 0 deletions

- book/sandbox/SanPart/ObservationTheory/.DS_Store 0 additions, 0 deletions

- book/sandbox/SanPart/ObservationTheory/01_Introduction.md 74 additions, 0 deletions

- book/sandbox/SanPart/ObservationTheory/02_LeastSquares.md 126 additions, 0 deletions

- book/sandbox/SanPart/ObservationTheory/03_WeightedLSQ.md 64 additions, 0 deletions

- book/sandbox/SanPart/ObservationTheory/04_BLUE.md 86 additions, 0 deletions

- book/sandbox/SanPart/ObservationTheory/05_Precision.md 129 additions, 0 deletions

- book/sandbox/SanPart/ObservationTheory/06_MLE.md 83 additions, 0 deletions

- book/sandbox/SanPart/ObservationTheory/07_NLSQ.md 86 additions, 0 deletions

- book/sandbox/SanPart/ObservationTheory/08_Testing.md 5 additions, 0 deletions

- book/sandbox/SanPart/ObservationTheory/99_NotationFormulas.md 68 additions, 0 deletions

- book/sandbox/SanPart/figures/.DS_Store 0 additions, 0 deletions

- book/sandbox/SanPart/figures/ObservationTheory/01_Regression.png 0 additions, 0 deletions

- book/sandbox/SanPart/figures/ObservationTheory/02_LeastSquares_fit.png 0 additions, 0 deletions

- book/sandbox/SanPart/figures/ObservationTheory/02_LeastSquares_sol.png 0 additions, 0 deletions

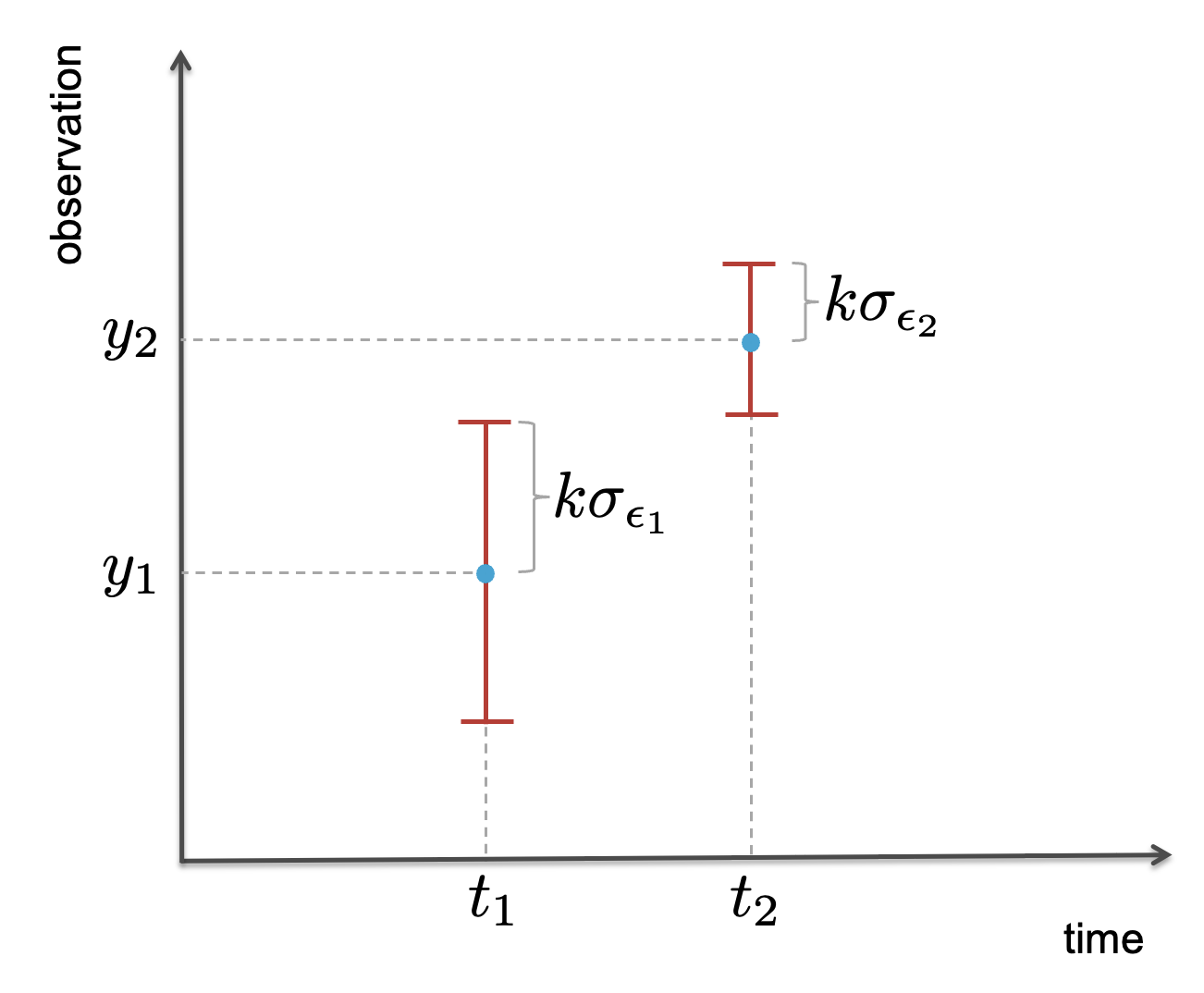

- book/sandbox/SanPart/figures/ObservationTheory/05_CI.png 0 additions, 0 deletions

- book/sandbox/SanPart/figures/ObservationTheory/05_CI_model.png 0 additions, 0 deletions

- book/sandbox/SanPart/figures/ObservationTheory/05_standard.png 0 additions, 0 deletions

book/sandbox/SanPart/.DS_Store (0 → 100644): File added

File added

book/sandbox/SanPart/figures/.DS_Store (0 → 100644): File added

Sizes of added binary files: 95.5 KiB, 122 KiB, 99.5 KiB, 70 KiB, 53.6 KiB, 64.4 KiB